Program Notes

Mechanical Techno / TidalCycles hybrid set by G. Dunning

Graham Dunning uses TidalCycles alongside his Mechanical Techno contraption in an unconventional hybrid

performance, which allows room for error, inconsistency and play. The performance highlights similarities

in visual and sonic presentation between the two different approaches.

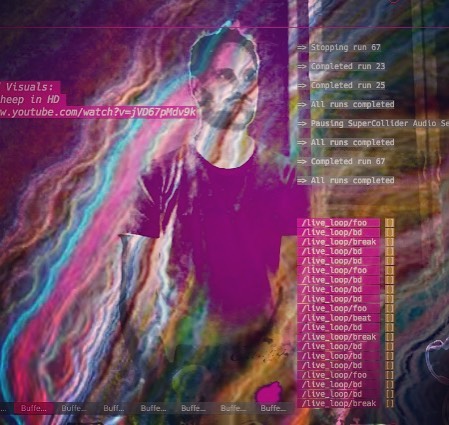

Coding in atypical places by M. Cortés and I. Abreu

Live cinema coding, experimental music and motion typographics of the code edited live. Cinematographic and

sound collage of unfinished expressions of the idea of the future in Mexico City. The project is created with

the algorithmic possibilities of TidalCycles and Processing. The premiere of this live act took place in the

last edition of Mutek Montreal 2018.

Audiovisual Roulette by Cybernetic Orchestra

The Cybernetic Orchestra is a participatory live coding ensemble that employs a shifting and heterogeneous

mix of languages and configurations to make music. Multi-linguality and collaborative interfaces and practices

have become definitional for the group. For ICLC 2019 we propose a double roulette in which orchestra members

will take turns modifying either notations that produce music or notations that produce generative visuals

in conjunction with that music. Our performance will use the Estuary web-based platform, and showcase new and

developing features of that platform, including but not limited to: the Punctual language for live coding

synthesis graphs, new notations for generative visuals, graphical interfaces that blur the line between text

programming and GUI-style interaction, responsive and assistive displays, etc.

Safeguard

Safeguard is an autonomous live-coded performance. The performer is a machine learning algorithm, Cibo, trained

to perform (read, change, execute, and repeat) Tidal code. Cibo was trained on sequences of Tidal code; in this way,

the implementation of the algorithm has no knowledge of sound. This separation of code and sound resulted in a trained

algorithm that recognizes how a human performer would change their code from the human’s perception of sound. Even

at this early stage of development several intriguing personified occurrences have cropped up. Further details,

implementation methods, and philosophical musings of this research are in our paper submission.

We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan Xp GPU used for this research.

Street Code

Street Code is a series of performances of live coded electroacoustic music in public, half public, and/or open

space. It is about taking electroacoustic music from its usual venues and bringing it to unexpected places where

it can enter in dialogue with the city space, both physical and social, and with the architecture of the city.

Finding nice spots to play where the sound could be interesting, and the projection of the codes painting the

walls with musical scores of light created on the fly is what Street Code is all about.

Street Code is a performance for one or many live coders, for a stage, for a tree, for a street, for an alley,

park, or subway station. With a huge sound system or a small USB speaker and for any duration of time.

Círculo e Meio (Circle and Half) by J. Chicau and R. Bell

An Audio-Visual Live Coding Performance Combining Choreographic Thinking and Algorithmic Improvisation.

This piece choreographs the movements in graphic and sound design carried out by human and non-human performers interacting

on themes of hybridity, circulation, and the permeability of language. By adding software agents, the piece expands a

previous composition for two improvising performers, one live coding a browser window and another live coding audio, which

used the circle as a base choreographic concept for how we perceive relationships between users, software agents, the

computing environment, and society at large. We also extended a shared vocabulary to include these concepts and have

moved from strictly dynamic percussive sounds and vocals that evoke sensations of vibration, rhythm and fast movement to

include fluid-like sounds and images, harmony, gradients, mixed non-linear horizontal and vertical arrangements, and

other related elements.

The length of the performance has been extended from the version originally presented at the International Live Interfaces

Conference 2018 [1], and it will be excerpted to match the constraints of this conference.

SquareFuck by M. Caliman

A performance that extends the live coding act to outside of the software IDE, by integrating real time assembly and

manipulation of electronic circuits to the traditional “show us your screen” code display, while live coding Arduino

boards and physically manipulating speakers. squareFuck unveils a process of failure. The inability to perform a task is,

ultimately, the unwillingness to leave things behind.

Off<>zzing the CKalcuƛator by A. Veinberg and F. Noriega

The CKalcuƛator is a lambda-calculus arithmetic calculator for the piano. It is the fourth sub-system of the CodeKlavier

project whose goal is to become a domain specific programming language that enables a pianist to live code by playing

the piano (Veinberg and Ignacio 2018).

In this performance, duo Off<>zz - Felipe Ignacio Noriega (live coding) and Anne Veinberg (piano), will give a performance

in their standard formation of live coding in SuperCollider and piano playing but with the adjustment of Veinberg using

the CKalcuƛator system and thus composing and evaluating simple arithmetic operations and number comparisons with her

piano playing. The results of these operations are broadcast via OSC, enabling the laptop to utilize them as a

combination of number values, boolean switches, audio input and conditions for the live coded algorithms.
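
As a rough illustration of what "lambda-calculus arithmetic" can mean, the Python sketch below encodes numbers as Church numerals and evaluates an addition, a multiplication and a comparison using nothing but function application. It is not CodeKlavier or CKalcuƛator code, and every name in it (`church`, `add`, `mul`, `leq`, `to_int`, `to_bool`) is an assumption made for this example.

```python
# Church numerals: the number n is the function that applies f to x n times.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))

# Arithmetic expressed purely as function application.
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))

# Church booleans: a boolean selects one of two arguments.
true = lambda a: lambda b: a
false = lambda a: lambda b: b

# Comparison via predecessor and truncated subtraction (m - n, floored at 0).
is_zero = lambda n: n(lambda _: false)(true)
pred = lambda n: lambda f: lambda x: n(lambda g: lambda h: h(g(f)))(lambda _: x)(lambda u: u)
sub = lambda m: lambda n: n(pred)(m)
leq = lambda m: lambda n: is_zero(sub(m)(n))


def church(k):
    """Build the Church numeral for a non-negative Python int (helper for this sketch)."""
    n = zero
    for _ in range(k):
        n = succ(n)
    return n


def to_int(n):
    """Decode a Church numeral by counting how many times f is applied."""
    return n(lambda k: k + 1)(0)


def to_bool(b):
    """Decode a Church boolean into a Python bool."""
    return b(True)(False)


if __name__ == "__main__":
    print(to_int(add(church(2))(church(3))))
    print(to_int(mul(church(4))(church(3))))
    print(to_bool(leq(church(2))(church(5))))
```

In a setup like the one described, decoded results of this kind (numbers and booleans) are the sort of values that could then be sent on to another program, for instance over OSC.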

Etudes pour live coding a une main by M. Baalman

Typing is one of the main physical skills involved in text-based livecoding. The ‘classical’ interface for this is the

‘qwerty’-keyboard for 10 finger blind typing. In search of an alternative interface that would let me get away from the

laptop, but still code via a text interface, I came across the commercial Twiddler one-handed (twelve-button) keyboard.

To learn how to use it, I needed practice materials to study the chording for writing code and created an interface that

allows for playback of recorded livecoding scripts, which I then need to type and execute line by line. The interface

is available at https://github.com/sensestage/SuperColliderTwiddler.

At this ICLC, I will perform from a script of RedFrik’s performance “Livecoded supercollider, well practiced” at the

LOSS Livecode Festival in Sheffield on July 20th, 2007, as found on Fredrik Olofsson’s website

(https://www.fredrikolofsson.com/f0blog/?q=node/155). To make the script suitable for performance in 2019 within the

etude-environment, minor adjustments were made in the code to ensure that it would execute and to reduce the line

length to fit into the graphical interface.

Moving Patterns by K. Sicchio

Moving Patterns is a duet for dancer and coder. It allows a visual score for the dancer to be created in real time

through the Haskell-based live coding language TidalCycles and special image language DanceDirt. The audience sees the

performers react and change to the score as it develops through coding and movement on stage.

Scorpion Mouse: Exploring Hidden Spaces by J. Levine and M. Cheung

Scorpion Mouse is a duo that explores how algorithmic music and a live vocalist can have a symbiotic relationship and

improvise together. May improvises over the music livecoded by Jason. Jason gets his phrasing cues, dynamics, and transitions

from May’s singing. Similarly, information from Jason’s livecoding system is sent to May’s digital voice processor so that

rhythmic and harmonic effects are in time and in key with the livecoded music, while the amplitude of May’s voice affects the

amplitude and filtering of the livecoded beats so that there is space while she is singing, and so the beats are full force

when she is not. In this way Scorpion Mouse are free to explore vast musical territories while maintaining organic unity.
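
The voice-to-beats relationship described above resembles what is often called ducking. The following Python sketch shows one way such a mapping could work: an envelope follower tracks the level of the voice, and that level scales down the gain of the beats. It is only an illustration under assumed parameters (`smoothing`, `depth`) and assumed function names, not a description of the duo's actual system, and it covers amplitude only, not filtering.

```python
def follow_envelope(samples, smoothing=0.9):
    """One-pole envelope follower: a smoothed absolute amplitude per sample."""
    env = 0.0
    out = []
    for s in samples:
        env = smoothing * env + (1.0 - smoothing) * abs(s)
        out.append(env)
    return out


def duck_gain(envelope, depth=0.8):
    """Map voice level (clamped to 0..1) to a beat gain: louder voice, quieter beats."""
    return [1.0 - depth * min(e, 1.0) for e in envelope]


def apply_ducking(beats, voice, smoothing=0.9, depth=0.8):
    """Scale each beat sample by the gain derived from the voice at the same index."""
    gains = duck_gain(follow_envelope(voice, smoothing), depth)
    return [b * g for b, g in zip(beats, gains)]


if __name__ == "__main__":
    beats = [1.0] * 8
    silent_voice = [0.0] * 8
    loud_voice = [1.0] * 8
    print(apply_ducking(beats, silent_voice))  # beats pass through unchanged
    print(apply_ducking(beats, loud_voice))    # beats fade as the voice level builds
```

With `depth=0.8`, a sustained full-level voice pulls the beats down towards 20% of their original amplitude, and they return to full force as the envelope decays.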

Musically, Scorpion Mouse has influences from electronic styles like trance, drum and bass, IDM, and ambient as well as

influences from electric styles like jazz, soul, rock and pop. Their music is built from three layers: On the bottom, rhythmically

complex percussive beats. In the middle, lush synth harmonies, and on top, ethereal melodic vocals.

Transit; is worse than the flood by J. Forero

Transit is an audiovisual work for live coding. Transit explores computer and cultural processes, which are created and

destroyed from their definition, in words or codes, and are transformed in time, by their diverse interactions. The work

is inspired, in particular, by the life of Lakutaia Lekipa, known as the last woman carrier of the Yaganes language and

traditions, a pre-Hispanic culture of the extreme south of Chile and Argentina. Thus, in a poetic exercise, the written

codes carry messages for the execution in real time of the sounds and graphics that build the multimedia sequences.

Supercontinent by D. Ogborn, S. Knotts, E. Betancur, J. Rodriguez, A. Brown and A. Khoparzi

SuperContinent is an intercontinental live coding ensemble, with one member per continent. The ensemble rehearses online,

via the evolving Estuary platform for collaborative live coding, bringing together artists of quite different

sensibilities and exposing the distinctive requirements of live coding ensembles that are highly and definitionally

geographically distributed. Our ICLC 2019 performance will bring together the live coding of patterns, the live coding

of synthesis graphs (via the new Punctual language for live coding), and the live coding of generative visuals.

Live coding with crowdsourced sounds & a drum machine by A. Xambó

The access to crowdsourced digital sound content is becoming a valuable resource for music creation and particularly

music performance. However, finding and retrieving relevant sounds in performance leads to challenges, such as finding

suitable sounds in real time and using them in a meaningful musical way. These challenges can be approached using music

information retrieval (MIR) techniques. In this session, the self-built system MIRLC (Music Information Retrieval Live

Coding), a SuperCollider extension, will be used to access audio content from the online Creative Commons (CC) sound

database Freesound. The use of high-level MIR methods is possible (e.g., query by pitch or rhythmic cues). This live

audio stream of soundscapes from crowdsourced audio material will be combined with a vintage Roland MC-09 used as a drum

machine.

Livecoding temporal canons with Nanc-in-a-Can by A. Franco, D. Villaseñor and T. Sánchez

This performance will showcase the creative possibilities of Nanc-in-a-Can, an open source SuperCollider

library that we recently developed. Its main focus is the creation and modification of temporal canons (pioneered

by Conlon Nancarrow) in a live and interactive setting. We will be exploring the formal and rhythmic aspects of the

temporal canon form as well as the application of canonic data to sound synthesis and graphics generation, in order

to generate timbral canonic structures that would be “voicing” our pitch based canons, thus generating canons

within canons.
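
For readers unfamiliar with the form, a temporal (tempo) canon plays the same melody in several voices at different speeds. In a convergence canon the voices are offset so that they all reach one chosen note at the same instant. The Python sketch below computes onset times for such a canon. It does not use or reproduce the Nanc-in-a-Can API; the function names and the `cp_index` parameter are assumptions made for this illustration.

```python
from itertools import accumulate


def onsets(durations, tempo_ratio):
    """Onset time of each note when every duration is divided by tempo_ratio."""
    scaled = [d / tempo_ratio for d in durations]
    return [0.0] + list(accumulate(scaled))[:-1]


def convergence_canon(durations, tempo_ratios, cp_index):
    """Return one onset list per voice, shifted so that all voices play
    note number cp_index (0-based) at the same moment."""
    per_voice = [onsets(durations, ratio) for ratio in tempo_ratios]
    # The slowest route to the convergence point decides when it happens.
    latest = max(voice[cp_index] for voice in per_voice)
    return [[t + (latest - voice[cp_index]) for t in voice] for voice in per_voice]


if __name__ == "__main__":
    melody = [1.0, 1.0, 1.0, 1.0]           # four notes of equal duration
    voices = convergence_canon(melody, [1.0, 2.0], cp_index=2)
    for ratio, times in zip([1.0, 2.0], voices):
        print(ratio, times)
```

Here the faster voice enters later and catches up with the slower one exactly at the third note, after which it runs ahead.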

Contemporary Witchcraft by S. Knotts, S. Bahng and K. Cas

...that Enchantress who has thrown her magical spell around the most abstract of Sciences and has grasped it with a

force which few masculine intellects (in our own country at least) could have exerted over it. (Babbage, 1843)

Contemporary Witchcraft is a performance project by Project Group: a multimedia inter-continental feminist performance group.

The performance combines sound, video and movement in a reflection on the interweaving of narratives on programming, women's

bodies and mysticism. The performance is telematic with each performer located on a different continent: in Australia, Spain

and South Korea.

Each performer transmits a different medium which the others respond to, to develop an improvised performance. Knotts live

codes music, Cas uses motion tracking devices to transmit movement data and Bahng interweaves archive video footage with

live video streams of Knotts and Cas performing. Cas and Bahng’s video streams are projected in the performance space

alongside the code interface and audio of Knotts.

Narrative threads on programming, mysticism and digitally mediated embodiment act as stimulus to the improvisation,

freely interwoven, meshed, meeting and diverging. The performance is a condensed framework, a meeting of mediatised

cultures, a relation between three mediated bodies distributed in space in a durational awakened process.

iCantuccini present: The Mandelbrots Experience by Benoit and the Mandelbrots

iCantuccini plays ‘Greatest Hits’ only! In The Mandelbrots Experience they try to capture and recreate the original

spirit of the live coding boy band Benoît and the Mandelbrots in an unforgettable live show, performing all their

rememberable tunes from The Mandelbrots 2016 Release. Get vegetal with Gurke (German for ‘carrot’) and enjoy the bright

future envisioned in Hello Tomorrow.

Move your bodies to Tranceposition and Tranceportation (which sound awfully alike).

Enjoy the retro-futuristic sounds of Classic or experience a stroll through the Mandelbrots’ hometown with Karlsruhe (‘Charles, remain!’).

On top of the classic sound you’ll get the classic Mandelbrots look, the authentic stage outfits and the same visuals backdrop

behind the code projection The Mandelbrots used without a change since 2012.

As iCantuccini cannot perform their full show at ICLC they will play a medley of the audience’s two favorite tracks.

Select your favorites until under this URL and listen to the live coded recreation by the first Mandelbrots cover band

ever (voting closes 5 minutes before the show).

A Nice Dream In Hell

I propose a performance using extended TidalCycles techniques to create warped, breakneck rave-inspired electronic music.

Conflicting Phases

Our band is called Kolmogorov Toolbox, named after a Soviet mathematician who made remarkable contributions to

information theory and computational complexity. We are two enthusiastic computer science engineers and musicians

from Hungary. We create algorithmic and generative music, primarily through live coding. During our sessions,

we use FoxDot to generate music, and Troop to work on it simultaneously. The act is pure improvisation,

not relying on any pre-written code.

Algorave 100 fragments

In this performance, I try to unite audio and visual stimuli.

I wrote 100 shader (GLSL) animations and put them into an application built with openFrameworks. This application selects one

shader animation each time it detects a note event from TidalCycles via OSC. So when I perform a live coding session with TidalCycles,

this system automatically generates complex, synchronized animations in real time.
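
The selection step can be pictured with a small Python sketch. The notes do not say how a shader is chosen, so the policy here (a random pick that never repeats the current shader) is purely an assumption, as are the class name `ShaderSelector` and the shape of the event dictionary. OSC reception and rendering are left out entirely.

```python
import random


class ShaderSelector:
    """Chooses which of N shader animations to show for each incoming note event."""

    def __init__(self, shader_count=100, seed=None):
        if shader_count < 2:
            raise ValueError("need at least two shaders to switch between")
        self.shader_count = shader_count
        self.rng = random.Random(seed)
        self.current = 0

    def on_note(self, event):
        """Call once per note event; returns the index of the shader to display.

        The event content is ignored in this sketch; a real system might
        instead derive the index from the sample name or note number.
        """
        choice = self.rng.randrange(self.shader_count - 1)
        # Skip over the current index so that every note produces a visible change.
        if choice >= self.current:
            choice += 1
        self.current = choice
        return self.current


if __name__ == "__main__":
    selector = ShaderSelector(seed=2019)
    # Hypothetical note events, as they might look after decoding an OSC message.
    for event in [{"s": "bd"}, {"s": "sn"}, {"s": "hh"}]:
        print(event["s"], "-> shader", selector.on_note(event))
```

Because the visuals change only when a note arrives, their rhythm follows the rhythm of the pattern being live coded.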

AMEN $ Mother Function

One sample, one function – a live-coded, single function demolition of one of the most ubiquitous samples in modern

music. This performance work is both an essay in conceptual minimalism and an attempt at filling the dance floor with

the aid of one wavetable and a little maths.

Entirely created within the context of a single, pure DSP function running at audio rate, the performance

starts with a sawtooth LFO reading from a wavetable filled with the Amen break (represented by $). Through

the course of the performance, the Amen break is gradually unmade into new rhythms, melodic and synthetic

sounds. Working with a single sample at a time, and eschewing recursion and randomness, provides a restrictive

but rewarding creative challenge.

AMEN $ Mother Function had its genesis at the Algorave at ICLC 2016, and a conversation about the large number

of samples used in some performances, as well as the popularity of functional programming in live coding.

It inspired me to pare back, start working with a single sample and a single function, seeing how far these

minimal means could be pushed in creating a musical performance. Since Spring 2017 this work has been performed

at various events across Europe, continuing to evolve each time with new elements and many chance mistakes.

Codie

We are a live coding trio, making music and visuals. Our performances are A/V shows with electronic sounds

and video projections. We perform by creating our works live and anew for each performance. Our sounds are

dancey and our graphics are SoCal. Sarah’s visuals are coded with her own Clojurescript-Electron app called

La Habra. She creates patterns and abstract shapes that animate and move to the rhythm. Kate and Melody’s

music is created in Sonic Pi. They manipulate and layer samples with algorithms to create the soundtrack.

We type and improvise as we go, reacting to each other’s output and the audience’s reactions. In accordance

with the Live Coding Draft Manifesto, we project our code as we go, so the audience can inspect and marvel at

the changes and accumulations. Codie has appeared at LiveCodeNYC Algoraves and other A/V events in New York City.

Oscillare

My program, Oscillare, uses Haskell to livecode TouchDesigner. I performed at the 2017 ICLC in MX,

but my system, inputs, and output have changed a lot since then. Oscillare now contains input support for a

clock sync with other performers, live pressure sensitive drawing input, and I’m working on adding Arduino

sensor support. Outputs have expanded from just projections to any DMX setup that the venue provides.

Augmented Reality is a topic of interest at this year’s ICLC, and I want to augment reality by adding multiple

inputs/outputs all going through a central live-coded hub and moving the visuals away from strict

code -> projector.

M4LD1TX 5UD4K4

M4LD1TX 5UD4K4 is an audiovisual algorave session of voodoochild/ based on live-coding sequencing MIDI via

TidalCycles, Text-to-Speech in SuperCollider and audio-reactive visuals developed in Processing and controlled

via OSC.

M4LD1TX 5UD4K4 is a series of real-time compositional exercises based on elements taken from Latin American pop

culture with a retrofuturist look that references synth-pop as a post-apocalyptic folklore, pretending to be

both protest song and dance music at the same time.

In M4LD1TX 5UD4K4 live-coding operates as an exercise of reappropriation, dialogue and update of the memory in

the "now". From the tensions and permanent contradictions between the local and the global, between the

anachronic and the current, the hi and the low fi, etc., the performance leads through code as denaturalized

language to a visual and sound experience that is articulated from the intimate and emotional; the code, digitized,

visible, is considered as another "lyric" layer of the performance, and the live-coding in its displacement is

proposed as a posthuman song.

M4LD1TX 5UD4K4 attempts to juxtapose imaginaries in an exercise of articulation of affective and territorial

memory. This way, to images of flag patterns, the wipala, 8-bit street riots, or the inverted world map, are

added the lyrics and arpeggios of songs of Los Prisioneros, Víctor Jara or Virus as part of a dystopian

iconographic and musical exploration.

.superformula

The performance for live visuals is live coded with Processing, using the REPL engine to edit the code on the fly

and change it without recompiling, so that the performance is live coded without interruptions. The

visuals will be based on math formulas, trigonometry, hypercubes, supershapes, and will be synchronized live

with the music playing and with the devices of the audience, through a web app and a Node.js server.

VISOR

I propose to perform the visuals for an algorave session at the conference. I will be performing with Visor, a new

live coding environment for creative coding of visuals in live performances. Live coding is performed in the Ruby

language and graphical capabilities are provided by Processing. Visor utilises user interfaces to supplement live code,

allowing, for example, manipulation of the program state, or visualisation of and interaction with real-time audio reactive

techniques. I have been working on Visor as part of my ongoing research into how live coding and VJ practice can be

combined.

TecnoTexto

For ICLC 2019 I propose TecnoTexto, a live coding performance with SuperCollider in which I will write and

rewrite rhythmic patterns (Pdef) in order to control the synthesizers (SynthDef) of my instrument library (all

synths are written by me, except the clap synth authored by otophilia). The performance begins by writing rhythmic

patterns of percussive sounds and tuned notes from scratch. Occasionally I add previously written patterns which I

select depending on the concert situation.

Besides my synths library I use the SuperCollider class tecnotexto.sc which I have developed based on

requirements and questions coming from my practice of live coding. I am interested in the possibilities of

high and low levels of the programming languages. In the case of high level to perform and interact with code

in a live situation, and in the case of low level the preparation and development of instruments and classes.

For the moment, the class TecnoTexto allows me to call my synths library, test sound and call some objects.

Algorave Set

For this Algorave set, Olivia and Alexandra will team up to create an audio visual experience of

algorithmic/broken/textural techno. Alexandra plays her music using TidalCycles and connects with Olivia,

who makes her visuals with Hydra, a software she wrote herself.

TYPE

We work collaboratively in a shared text buffer using software called Troop where each member of TYPE

constructs portions of music-generating code before interacting with the work one another has left behind.

By making and unmaking each other’s code TYPE works both together and against each other in the exploration of

sound and rhythms. The Troop software will allow us to perform over the internet with two performers connecting

remotely from the UK.

Class Compliant Audio Interfaces (CCAI)

At algoraves, live code visualists and musicians often do unplanned collaborations. This is a challenge as

there is little opportunity for communication, especially as musicians are generally too focussed on their code

to appreciate the visuals, which are in any case often projected behind them. A running joke is that a visualist

might find themselves approaching a musician to ask, “do you mind if I do visuals for you?”, but a musician does

not think to ask a visualist “do you mind if I make music for you?”.

We will use this performance as a test-bed for closer collaboration, using techniques such as live data sharing

over the OSC protocol, giving visualists access to high-level audio parameters, and displaying the live coded

video on the live coding musician’s screen, behind their code. The aim is to achieve the feeling that the

visualist and musicians are following each other, in a balanced performance.

Roosa Poni

Roosa Poni is a collaboration between vocalist Delia Fano Yela and sound artist Alo Allik. This improvised

audiovisual performance explores the connection between different vocal styles and live coding in an algorave

context. Delia’s vocals ranging from funk and soul to raw laments are processed and woven into the fabric of

live coded drones and rhythms of noisefunk. Noisefunk is a musical style that is derived from analysis of

traditional rhythm patterns that have been stripped down to their most essential skeletal structures and

different patterns are juxtaposed during performances to create long complex cycles and unpredictable

syncopation while still maintaining a clearly discernible downbeat in order to undermine the dominance

of the 4/4 time signature of electronic dance music. The sonic material for these rhythm patterns is developed

with evolutionary algorithms that evolve complex synthesis graphs. The live input is processed with the

aid of machine listening processes to follow tonal, temporal and spectral characteristics of the voice

and use this information to control synthesis parameters. The generative visuals, based on artificial

life algorithms, are also affected by live audio analysis.

TidalStems

This algorave performance will demonstrate a new and experimental Haskell library for mixing between short

TidalCycles compositions that have emerged from several hack-pacts. A hack-pact is a Live Coding practice in which a

group of participating members hold each other accountable for producing something with code on a regular basis. Often

hack-pacts produce rich libraries of pithy (30s-90s) mini-compositions that briefly explore one musical or theatrical

idea. The TidalStems Haskell module provides an interface to both archive and recall Tidal compositions such as those

generated from a hack-pact. A Tidal composition can be encoded according to the TidalStems type structure as an

indeterminate list of musical ‘phrases’, where each phrase consists of a list of ‘stems’ (borrowing the term from

music production). A stem is represented as a tuple correlating a descriptive category for the stem (such as Drums,

Melody, Chords, Bass, etcetera) with a Tidal pattern. The TidalStems library provides accessor functions to play a

particular phrase or stem from a Tidal composition, offering an easy interface for quickly mixing between Tidal

compositions. For instance, to play the bass line of the 3rd phrase of an archived Tidal composition, the performer

could enter:

d1 $ getStem "my-song" 3 Bass

While the archived compositions are pre-programmed, the performer improvises their curation of compositions,

phrases, and stems, and explores new combinations of the components of different musical ideas. Furthermore,

pre-programmed compositions can be altered by combining them with additional Tidal patterns (such as through

use of the Tidal “#”, “|*|”, and “|+|” operators).
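
The type structure described above (a composition as a list of phrases, each phrase a list of stems, each stem a category paired with a pattern) can be modelled in a few lines. The sketch below is written in Python rather than Haskell and is not the TidalStems library itself: the archive contents, the use of plain strings as stand-ins for Tidal patterns, the 1-based phrase numbering, and the names `Category`, `get_phrase` and `get_stem` are all assumptions made for illustration.

```python
from enum import Enum


class Category(Enum):
    """Descriptive stem categories named in the text."""
    DRUMS = "Drums"
    MELODY = "Melody"
    CHORDS = "Chords"
    BASS = "Bass"


# Hypothetical archive: composition name -> list of phrases,
# where each phrase is a list of (category, pattern) stems.
# Patterns are placeholder strings standing in for Tidal patterns.
ARCHIVE = {
    "my-song": [
        [(Category.DRUMS, "bd*4"), (Category.BASS, "c2 ~ c2 ~")],
        [(Category.DRUMS, "bd sn"), (Category.CHORDS, "c e g")],
        [(Category.DRUMS, "bd*2 sn"), (Category.BASS, "c2 e2 g2 e2")],
    ]
}


def get_phrase(archive, name, phrase_number):
    """Return one phrase of a composition; phrases are counted from 1 here."""
    return archive[name][phrase_number - 1]


def get_stem(archive, name, phrase_number, category):
    """Return the pattern of the first stem in the phrase with the given category."""
    for stem_category, pattern in get_phrase(archive, name, phrase_number):
        if stem_category == category:
            return pattern
    raise KeyError(f"{name!r} phrase {phrase_number} has no {category.value} stem")


if __name__ == "__main__":
    # Mirrors the example in the text: the bass line of the 3rd phrase.
    print(get_stem(ARCHIVE, "my-song", 3, Category.BASS))
```

Keeping the category in the stem itself is what makes it possible to pull, say, only the bass line out of one composition and layer it with the drums of another.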

Luuma

The algorave set will focus on a mixture of acid techno, breakcore and noise music. I will be using a live

coding library that I have been developing and testing in algoraves over the past few years. The library is

built within SuperCollider, and offers tools to build malleable music that stretches across different time

variations, with a meta-sequencer that sequences tempo and time signatures. The library also features modular

style continuous sequencing methods, using combinations of pulse waveforms at varying frequencies, phases

and offsets to build up patterns. I use a QuNeo controller to record and map control sequences during

performance. If circumstances permit, I will also bring a newly developed feedback instrument that is

designed to act as a non-linear controller for sound synthesis parameters, which can be integrated into a

live coding environment.

fiestaPirata.com

We propose an Algorave performance where the aesthetics of fiesta sonidera and from-scratch livecoding

will collide. From guaracha cumbiambera to 3D primitives, tropical hardcore, and video-memes, the audience

will experience a mixing of sound/video manipulated through both sampling and synthesis techniques. The tool

to achieve this is Orbit 1, an OpenFrameworks/SuperCollider live coding tool, recently developed by the RGGTRN

member Emilio Ocelotl. The aim of this performance is to achieve a comprehensive aesthetic where the sound

is not separated from the visuals and vice versa.

Dead letters

A live coding performance, from scratch, using INSTRUMENT, a library developed by myself,

integrated with two other libraries for SuperCollider (CaosPercLib + nanc-in-a-can) developed by music

hackers from the Mexican community. The intent behind these two livecoding tools is to provide a musical

way of approaching coding without leaving the SuperCollider environment, which allows a musician to work

with synthesis and musical language in parallel. Rhythm, harmony, timbre, and melody are the four musical

realms that would normally require a big effort to combine. Thanks to these technologies, a musician is

able to compose live electronic music on the fly, with all resources available at their fingertips.

Improvisation

In this algorave performance, I plan to perform with at least four conductor agents. In previous performances,

I have only used one or two conductors. This will be my first public performance with more than two conductors,

which should make for a frenetic sound, but hopefully one that is still organized and interesting. Previously,

the conductors have affected which voices are playing and modified one of the rhythm patterns. In this performance,

I intend to use additional conductors to create variations of the current sample set, change sample sets entirely,

change density levels, change the type of density pattern, or other aspects of the system. This is part of ongoing

research which I am preparing for a paper describing the agent system which I use to carry out my performances.

Based on this performance and those to take place shortly after, I hope to gather enough experience and feedback

from experts to be able to bring that research into publishable form.

Surface Tension II

Surface Tension II is a live coding performance by codepage (Tina Mariane Krogh Madsen and Malte Steiner) taking

its departure point in the artists’ own field-recorded samples of water. The composition is created through analyzing

different material aspects of water using these data both concretely and conceptually, as a vital material. The piece is

live coded in TidalCycles, where each sample used is created uniquely for this performance. Through the use of a multi-channel

set-up the goal is to create an immersive and dynamic listening experience and in this way create sonic as well as environmental

awareness. Additionally the performance will explore the collaborative and experimental potentials of live coding.

untitled gibberings

This performance will be one of the first to use a new revision of Gibber, in which almost the entirety of the environment

was rewritten from scratch. One advantage of this new version is that audio now runs in its own thread, which enables

greater use of dynamic annotations and visualizations to display the state / progression of running algorithms. Additionally,

new “virtual analog” filters provide better sound quality when compared to previous iterations, along with many other sonic

improvements.

The performance itself cycles through a few different phases over its duration, beginning with algorithmically manipulating

a simple yet aggressive arpeggiated bass, transitioning to an ambient phase, and concluding with a barrage of percussion,

noise samples, and glitch effects.

LiveSpoken : Cyphering Thru Livecoding

LiveSpoken : Cyphering Thru Livecoding is a presentation and performance of how live-coding can transcend the screen

by combining it with the improvisational nature of the cypher, commonly seen in the hip-hop community. In this performance,

not only will the code be improvised in Sonic Pi, but the songwriting and vocal elements will also be improvised so the

audience can witness some of the stages that go into a freestyle session.